How Many Elements Can Be Stored in an Array of Dimension 2 by 3?

In computer science, an array data structure, or simply an array, is a data structure consisting of a collection of elements (values or variables), each identified by at least one array index or key. An array is stored such that the position of each element can be computed from its index tuple by a mathematical formula.[1][2][3] The simplest type of data structure is a linear array, also called a one-dimensional array.

For example, an array of ten 32-bit (4-byte) integer variables, with indices 0 through 9, may be stored as ten words at memory addresses 2000, 2004, 2008, ..., 2036 (in hexadecimal: 0x7D0, 0x7D4, 0x7D8, ..., 0x7F4), so that the element with index i has the address 2000 + (i × 4).[4]
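The address arithmetic above can be checked in C. This is a minimal sketch: `element_address` and `byte_offset` are hypothetical helper names, the base address 2000 is the article's illustrative value rather than anything a real compiler would choose, and a 4-byte `int` is assumed (common, but not guaranteed by the C standard).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Address of element i in a linear array: base + i * element_size. */
uintptr_t element_address(uintptr_t base, size_t i, size_t element_size)
{
    return base + (uintptr_t)(i * element_size);
}

/* Byte distance from a[0] to a[i], measured on a real C array; it
   equals i * sizeof(int) because the elements are contiguous. */
size_t byte_offset(const int *a, size_t i)
{
    assert(a != NULL);
    return (size_t)((const char *)&a[i] - (const char *)&a[0]);
}
```

With a base of 2000 and 4-byte elements, `element_address(2000, 9, 4)` gives 2036 (0x7F4), the last address in the example.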

The memory address of the first element of an array is called the first address, foundation address, or base address.

Because the mathematical concept of a matrix can be represented as a two-dimensional grid, two-dimensional arrays are also sometimes called matrices. In some cases the term "vector" is used in computing to refer to an array, although tuples rather than vectors are the more mathematically correct equivalent. Tables are often implemented in the form of arrays, especially lookup tables; the word table is sometimes used as a synonym of array.

Arrays are among the oldest and most important data structures, and are used by almost every program. They are also used to implement many other data structures, such as lists and strings. They effectively exploit the addressing logic of computers. In most modern computers and many external storage devices, the memory is a one-dimensional array of words, whose indices are their addresses. Processors, especially vector processors, are often optimized for array operations.

Arrays are useful mostly because the element indices can be computed at run time. Among other things, this feature allows a single iterative statement to process arbitrarily many elements of an array. For that reason, the elements of an array data structure are required to have the same size and should use the same data representation. The set of valid index tuples and the addresses of the elements (and hence the element addressing formula) are usually,[3][5] but not always,[2] fixed while the array is in use.
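As a small illustration of a single loop handling any number of elements, the sketch below sums an array whose length is only known at run time. The function name `sum_elements` is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* One loop handles any number of elements because the index i is
   computed at run time rather than fixed in the source. */
long sum_elements(const int *a, size_t n)
{
    assert(a != NULL || n == 0);
    long total = 0;
    for (size_t i = 0; i < n; i++)
        total += a[i];
    return total;
}
```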

The term array is often used to mean array data type, a kind of data type provided by most high-level programming languages that consists of a collection of values or variables that can be selected by one or more indices computed at run time. Array types are often implemented by array structures; however, in some languages they may be implemented by hash tables, linked lists, search trees, or other data structures.

The term is also used, especially in the description of algorithms, to mean associative array or "abstract array", a theoretical computer science model (an abstract data type or ADT) intended to capture the essential properties of arrays.

History

The first digital computers used machine-language programming to set up and access array structures for data tables, vector and matrix computations, and many other purposes. John von Neumann wrote the first array-sorting program (merge sort) in 1945, during the building of the first stored-program computer.[6] (p. 159) Array indexing was originally done by self-modifying code, and later using index registers and indirect addressing. Some mainframes designed in the 1960s, such as the Burroughs B5000 and its successors, used memory segmentation to perform index-bounds checking in hardware.[7]

Assembly languages generally have no special support for arrays, other than what the machine itself provides. The earliest high-level programming languages, including FORTRAN (1957), Lisp (1958), COBOL (1960), and ALGOL 60 (1960), had support for multi-dimensional arrays, and so has C (1972). In C++ (1983), class templates exist for multi-dimensional arrays whose dimension is fixed at runtime[3][5] as well as for runtime-flexible arrays.[2]

Applications

Arrays are used to implement mathematical vectors and matrices, as well as other kinds of rectangular tables. Many databases, small and large, consist of (or include) one-dimensional arrays whose elements are records.

Arrays are used to implement other data structures, such as lists, heaps, hash tables, deques, queues, stacks, strings, and VLists. Array-based implementations of other data structures are frequently simple and space-efficient (implicit data structures), requiring little space overhead, but may have poor time complexity, particularly when modified, compared to tree-based data structures (compare a sorted array to a search tree).
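A binary heap is a standard example of an implicit data structure: the tree shape lives entirely in the index arithmetic, so no pointers are stored. The sketch below is a min-heap with a hypothetical fixed capacity `HEAP_CAP`; the names `MinHeap`, `heap_push` and `heap_pop` are illustrative only.

```c
#include <assert.h>
#include <stddef.h>

#define HEAP_CAP 64  /* hypothetical fixed capacity for the sketch */

/* Min-heap stored implicitly in an array: the children of slot i are at
   2i+1 and 2i+2, and its parent is at (i-1)/2. */
typedef struct {
    int data[HEAP_CAP];
    size_t size;
} MinHeap;

static void swap_int(int *x, int *y) { int t = *x; *x = *y; *y = t; }

void heap_push(MinHeap *h, int value)
{
    assert(h->size < HEAP_CAP);
    size_t i = h->size++;
    h->data[i] = value;
    while (i > 0 && h->data[(i - 1) / 2] > h->data[i]) {  /* sift up */
        swap_int(&h->data[(i - 1) / 2], &h->data[i]);
        i = (i - 1) / 2;
    }
}

int heap_pop(MinHeap *h)
{
    assert(h->size > 0);
    int top = h->data[0];
    h->data[0] = h->data[--h->size];     /* move last element to root */
    size_t i = 0;
    for (;;) {                           /* sift down */
        size_t l = 2 * i + 1, r = 2 * i + 2, smallest = i;
        if (l < h->size && h->data[l] < h->data[smallest]) smallest = l;
        if (r < h->size && h->data[r] < h->data[smallest]) smallest = r;
        if (smallest == i) break;
        swap_int(&h->data[i], &h->data[smallest]);
        i = smallest;
    }
    return top;
}
```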

One or more large arrays are sometimes used to emulate in-program dynamic memory allocation, particularly memory pool allocation. Historically, this has sometimes been the only way to allocate "dynamic memory" portably.
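A minimal sketch of memory pool allocation from one large static array follows. It uses a simple bump allocator, which is only one of several pool designs: blocks are carved out by advancing an offset and can only be released all at once. `POOL_SIZE`, `pool_alloc` and `pool_reset` are hypothetical names, and C11 is assumed for `_Alignas`, `_Alignof` and `max_align_t`.

```c
#include <assert.h>
#include <stddef.h>

#define POOL_SIZE 4096  /* hypothetical pool size for the sketch */

/* One large static array serves as the heap. */
static _Alignas(max_align_t) unsigned char pool[POOL_SIZE];
static size_t pool_used = 0;

/* Hand out the next suitably aligned block, or NULL if the pool is full.
   Zero-size requests are treated as a caller error in this sketch. */
void *pool_alloc(size_t size)
{
    assert(size > 0);
    const size_t align = _Alignof(max_align_t);
    size_t start = (pool_used + align - 1) / align * align;  /* round up */
    if (start > POOL_SIZE || size > POOL_SIZE - start)
        return NULL;                                          /* exhausted */
    pool_used = start + size;
    return &pool[start];
}

/* Release every allocation at once by rewinding the offset. */
void pool_reset(void)
{
    pool_used = 0;
}
```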

Arrays can be used to determine partial or complete control flow in programs, as a compact alternative to (otherwise repetitive) multiple IF statements. In this context they are known as control tables and are used in conjunction with a purpose-built interpreter whose control flow is altered according to values contained in the array. The array may contain subroutine pointers (or relative subroutine numbers that can be acted upon by SWITCH statements) that direct the path of execution.
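The sketch below shows a tiny control table in C. An opcode read from the data indexes an array of function pointers, so the table rather than a chain of IF statements selects the routine to run. The opcodes, handlers and the `Instr`/`run` names are all hypothetical.

```c
#include <assert.h>
#include <stddef.h>

typedef int (*Handler)(int acc, int operand);

static int op_add(int acc, int x) { return acc + x; }
static int op_sub(int acc, int x) { return acc - x; }
static int op_mul(int acc, int x) { return acc * x; }

enum { OP_ADD, OP_SUB, OP_MUL, OP_COUNT };

/* The control table: opcode -> subroutine pointer. */
static const Handler control_table[OP_COUNT] = { op_add, op_sub, op_mul };

typedef struct { int opcode; int operand; } Instr;

/* Interpreter loop: each instruction's opcode selects the handler. */
int run(const Instr *prog, size_t n, int acc)
{
    for (size_t i = 0; i < n; i++) {
        assert(prog[i].opcode >= 0 && prog[i].opcode < OP_COUNT);
        acc = control_table[prog[i].opcode](acc, prog[i].operand);
    }
    return acc;
}
```

Adding a new behaviour means adding one table entry and one handler, without editing the interpreter loop.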

Element identifier and addressing formulas

When data objects are stored in an array, individual objects are selected by an index that is usually a non-negative scalar integer. Indexes are also called subscripts. An index maps the array value to a stored object.

There are three ways in which the elements of an array can be indexed:

- 0 (zero-based indexing): the first element of the array is indexed by subscript 0.[8]

- 1 (one-based indexing): the first element of the array is indexed by subscript 1.

- n (n-based indexing): the base index of an array can be freely chosen. Usually, programming languages allowing n-based indexing also allow negative index values, and other scalar data types like enumerations or characters may be used as an array index.

Zero-based indexing is the design choice of many influential programming languages, including C, Java and Lisp. It leads to a simpler implementation in which the subscript is an offset from the starting position of the array, so the first element has an offset of zero.

Arrays can have multiple dimensions, so it is not uncommon to access an array using multiple indices. For example, a two-dimensional array A with three rows and four columns might provide access to the element in the 2nd row and 4th column by the expression A[1][3] in a zero-based indexing system. Thus two indices are used for a two-dimensional array, three for a three-dimensional array, and n for an n-dimensional array.

The number of indices needed to specify an element is called the dimension, dimensionality, or rank of the array.

In standard arrays, each index is restricted to a certain range of consecutive integers (or consecutive values of some enumerated type), and the address of an element is computed by a "linear" formula on the indices.

One-dimensional arrays

A one-dimensional array (or single-dimension array) is a type of linear array. Accessing its elements involves a single subscript, which can represent either a row or a column index.

As an example, consider the C declaration int anArrayName[10]; which declares a one-dimensional array of ten integers. Here, the array can store ten elements of type int. Its indices run from zero through nine. For example, the expressions anArrayName[0] and anArrayName[9] are the first and last elements, respectively.

For a vector with linear addressing, the element with index i is located at the address B + c × i, where B is a fixed base address and c a fixed constant, sometimes called the address increment or stride.

If the valid element indices begin at 0, the constant B is simply the address of the first element of the array. For this reason, the C programming language specifies that array indices always begin at 0, and many programmers call that element "zeroth" rather than "first".

However, one can choose the index of the first element by an appropriate choice of the base address B. For example, if the array has five elements, indexed 1 through 5, and the base address B is replaced by B − 30c, then the indices of those same elements will be 31 to 35, because (B − 30c) + c × (i + 30) = B + c × i. If the numbering does not start at 0, the constant B may not be the address of any element.
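C itself only supports zero-based indexing, and forming a pointer before the start of an array is undefined behaviour, so a C program cannot literally shift the base address B. The sketch below takes an equivalent approach: it subtracts a stored lower bound on every access, which yields the same address B + c × (i − lo). The type `OffsetArray` and the function `oa_at` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

/* An array whose first valid index is `lo` instead of 0. */
typedef struct {
    int *data;     /* storage; data[0] holds the element with index lo */
    long lo;       /* smallest valid index */
    size_t n;      /* number of elements */
} OffsetArray;

/* Pointer to the element with index i, after a bounds check. */
int *oa_at(OffsetArray a, long i)
{
    assert(i >= a.lo && (size_t)(i - a.lo) < a.n);
    return &a.data[i - a.lo];
}
```

Viewing the same five-element storage with lower bounds 1 and 31 mirrors the example in the text: index 1 in the first view and index 31 in the second refer to the same element.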

Multidimensional arrays

For a two-dimensional array, the element with indices i, j would have address B + c · i + d · j, where the coefficients c and d are the row and column address increments, respectively.

More generally, in a k-dimensional array, the address of an element with indices $i_1, i_2, \ldots, i_k$ is

$$B + c_1 \cdot i_1 + c_2 \cdot i_2 + \cdots + c_k \cdot i_k.$$

For example: int a[2][3];

This means that array a has 2 rows and 3 columns, and its elements are of type int. It can therefore store 2 × 3 = 6 elements. They are stored linearly: first the entire first row, then the second row. The array above is stored as a11, a12, a13, a21, a22, a23 (using one-based labels; in C these are a[0][0] through a[1][2]).
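The count and the storage order can both be observed directly. In the sketch below, `ELEMENT_COUNT` divides the array's total size by the size of one element. `memory_order` copies the raw bytes with `memcpy`, which preserves address order, into a flat buffer, so the buffer shows the actual layout. The macro and function names are hypothetical, and the values 11 to 23 simply mirror the labels a11 to a23.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

enum { ROWS = 2, COLS = 3 };

/* Element count of a 2-D array: total bytes / bytes per element. */
#define ELEMENT_COUNT(arr) (sizeof (arr) / sizeof (arr)[0][0])

/* Copy the array's bytes, in address order, into a flat buffer so the
   storage order of the elements becomes visible. */
void memory_order(int a[ROWS][COLS], int out[ROWS * COLS])
{
    assert(a != NULL && out != NULL);
    memcpy(out, a, sizeof(int[ROWS][COLS]));
}

/* Row-major offset of a[i][j] from a[0][0], counted in elements. */
size_t row_major_offset(size_t i, size_t j)
{
    assert(i < ROWS && j < COLS);
    return i * COLS + j;
}
```

For `int a[2][3] = {{11, 12, 13}, {21, 22, 23}};`, `ELEMENT_COUNT(a)` is 6, and the flat buffer reads 11, 12, 13, 21, 22, 23.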

The general addressing formula requires only k multiplications and k additions, for any array that can fit in memory. Moreover, if any coefficient is a fixed power of 2, the multiplication can be replaced by bit shifting.
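The general formula translates directly into a loop with one multiplication and one addition per dimension. In this sketch, strides are measured in elements rather than bytes, and the caller is assumed to add the base address B. The names `linear_offset` and `scaled_by_shift` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* offset = c_1*i_1 + ... + c_k*i_k: k multiplications, k additions. */
size_t linear_offset(size_t k, const size_t stride[], const size_t index[])
{
    assert(stride != NULL && index != NULL);
    size_t offset = 0;
    for (size_t d = 0; d < k; d++)
        offset += stride[d] * index[d];
    return offset;
}

/* When a coefficient is a fixed power of two, 2^s, the multiplication
   can be replaced by a left shift. */
size_t scaled_by_shift(size_t index, unsigned s)
{
    return index << s;   /* equals index * ((size_t)1 << s) */
}
```

For a row-major 2 × 3 × 4 array the strides are {12, 4, 1}, so indices (1, 2, 3) map to offset 12 + 8 + 3 = 23, the last element.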

The coefficients $c_k$ must be chosen so that every valid index tuple maps to the address of a distinct element.

If the minimum legal value for every index is 0, then B is the address of the element whose indices are all zero. As in the one-dimensional case, the element indices may be changed by changing the base address B. Thus, if a two-dimensional array has rows and columns indexed from 1 to 10 and 1 to 20, respectively, then replacing B by $B + c_1 - 3c_2$ will cause them to be renumbered from 0 through 9 and 4 through 23, respectively. Taking advantage of this feature, some languages (like FORTRAN 77) specify that array indices begin at 1, as in mathematical tradition, while other languages (like Fortran 90, Pascal and Algol) let the user choose the minimum value for each index.

Dope vectors

The addressing formula is completely defined by the dimension d, the base address B, and the increments $c_1, c_2, \ldots, c_k$. It is often useful to pack these parameters into a record called the array's descriptor, stride vector, or dope vector.[2][3] The size of each element, and the minimum and maximum values allowed for each index, may also be included in the dope vector. The dope vector is a complete handle for the array, and is a convenient way to pass arrays as arguments to procedures. Many useful array slicing operations (such as selecting a sub-array, swapping indices, or reversing the direction of the indices) can be performed very efficiently by manipulating the dope vector.[2]
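Below is a minimal two-dimensional dope vector: a base pointer, the extent of each index, and a stride (in elements) for each index. Transposing, reversing and taking a sub-array only rewrite these fields, and no element is copied. The type `Dope2` and the `dope_*` functions are hypothetical. Offsets are computed as a single integer before being added to the pointer, so every pointer formed stays inside the underlying storage.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    int *base;            /* address of element (0, 0) */
    size_t extent[2];     /* number of valid indices per dimension */
    ptrdiff_t stride[2];  /* element step per unit change of each index */
} Dope2;

int *dope_at(Dope2 d, size_t i, size_t j)
{
    assert(i < d.extent[0] && j < d.extent[1]);
    return d.base + ((ptrdiff_t)i * d.stride[0] + (ptrdiff_t)j * d.stride[1]);
}

/* Swap the two indices: T[i][j] refers to A[j][i]. */
Dope2 dope_transpose(Dope2 d)
{
    Dope2 t = { d.base, { d.extent[1], d.extent[0] },
                { d.stride[1], d.stride[0] } };
    return t;
}

/* Reverse the second index: R[i][j] refers to A[i][extent-1-j]. */
Dope2 dope_reverse_cols(Dope2 d)
{
    assert(d.extent[1] > 0);
    d.base += (ptrdiff_t)(d.extent[1] - 1) * d.stride[1];
    d.stride[1] = -d.stride[1];
    return d;
}

/* Sub-array of rows [r0, r0+nrows) and columns [c0, c0+ncols). */
Dope2 dope_slice(Dope2 d, size_t r0, size_t nrows, size_t c0, size_t ncols)
{
    assert(r0 + nrows <= d.extent[0] && c0 + ncols <= d.extent[1]);
    d.base += ((ptrdiff_t)r0 * d.stride[0] + (ptrdiff_t)c0 * d.stride[1]);
    d.extent[0] = nrows;
    d.extent[1] = ncols;
    return d;
}
```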

Compact layouts

Often the coefficients are chosen so that the elements occupy a contiguous area of memory. However, that is not necessary. Even if arrays are always created with contiguous elements, some array slicing operations may create non-contiguous sub-arrays from them.

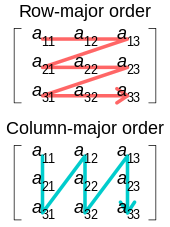

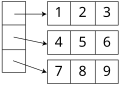

Illustration of row- and column-major order

There are two systematic compact layouts for a two-dimensional array. For example, consider the matrix

$$A = \begin{pmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{pmatrix}.$$

In the row-major order layout (adopted by C for statically declared arrays), the elements in each row are stored in consecutive positions, and all of the elements of a row have a lower address than any of the elements of a consecutive row:

1 2 3 4 5 6 7 8 9

In column-major order (traditionally used by Fortran), the elements in each column are consecutive in memory, and all of the elements of a column have a lower address than any of the elements of a consecutive column:

1 4 7 2 5 8 3 6 9

For arrays with three or more indices, "row-major order" puts in consecutive positions any two elements whose index tuples differ only by one in the last index. "Column-major order" is analogous with respect to the first index.
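The two layouts differ only in which index gets stride 1. The sketch below gives both offset formulas for an nrows × ncols matrix and converts a row-major buffer to column-major order. Applied to the 3 × 3 example matrix, it reproduces the sequence 1 4 7 2 5 8 3 6 9. The function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Row-major: rows are contiguous, so the column index has stride 1. */
size_t row_major(size_t i, size_t j, size_t nrows, size_t ncols)
{
    assert(i < nrows && j < ncols);
    return i * ncols + j;
}

/* Column-major: columns are contiguous, so the row index has stride 1. */
size_t col_major(size_t i, size_t j, size_t nrows, size_t ncols)
{
    assert(i < nrows && j < ncols);
    return j * nrows + i;
}

/* Copy a row-major nrows x ncols matrix into column-major order. */
void row_to_col_major(const int *src, int *dst, size_t nrows, size_t ncols)
{
    assert(src != NULL && dst != NULL);
    for (size_t i = 0; i < nrows; i++)
        for (size_t j = 0; j < ncols; j++)
            dst[col_major(i, j, nrows, ncols)] = src[row_major(i, j, nrows, ncols)];
}
```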

In systems that use a processor cache or virtual memory, scanning an array is much faster if successive elements are stored in consecutive positions in memory rather than sparsely scattered. Many algorithms that use multidimensional arrays scan them in a predictable order. A programmer (or a sophisticated compiler) may use this information to choose between row- and column-major layout for each array. For example, when computing the product A·B of two matrices, it would be best to have A stored in row-major order and B in column-major order.
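The following sketch shows why that layout choice helps. With A in row-major order and B in column-major order, the inner product loop reads both row i of A and column j of B from consecutive memory locations. The function name `matmul_rowA_colB` and the square n × n shape are assumptions made for brevity. Real libraries usually rely on blocking and other optimizations instead.

```c
#include <assert.h>
#include <stddef.h>

/* C = A * B for n x n matrices in flat arrays.
   A and C are row-major; B is column-major. */
void matmul_rowA_colB(size_t n, const double *A, const double *B, double *C)
{
    assert(A != NULL && B != NULL && C != NULL);
    for (size_t i = 0; i < n; i++) {
        const double *row = &A[i * n];       /* A[i][0..n-1], contiguous */
        for (size_t j = 0; j < n; j++) {
            const double *col = &B[j * n];   /* B[0..n-1][j], contiguous */
            double sum = 0.0;
            for (size_t k = 0; k < n; k++)
                sum += row[k] * col[k];
            C[i * n + j] = sum;              /* store row-major */
        }
    }
}
```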

Resizing

Static arrays have a size that is fixed when they are created and consequently do not allow elements to be inserted or removed. However, by allocating a new array and copying the contents of the old array to it, it is possible to effectively implement a dynamic version of an array; see dynamic array. If this operation is done infrequently, insertions at the end of the array require only amortized constant time.
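One common way to make reallocation infrequent is to grow the capacity geometrically. The sketch below doubles it, which is an assumed policy since other growth factors also work. With geometric growth, n appends perform O(n) total copying, i.e. amortized constant time per append. `IntVec`, `vec_push` and `vec_free` are hypothetical names.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct {
    int *data;
    size_t size;      /* elements in use */
    size_t capacity;  /* elements allocated */
} IntVec;

/* Append value; returns 0 on success, -1 if allocation fails. */
int vec_push(IntVec *v, int value)
{
    assert(v != NULL);
    if (v->size == v->capacity) {
        size_t new_cap = v->capacity ? 2 * v->capacity : 4;
        /* realloc copies the old contents if it cannot grow in place */
        int *p = realloc(v->data, new_cap * sizeof *p);
        if (p == NULL)
            return -1;            /* old block is still valid */
        v->data = p;
        v->capacity = new_cap;
    }
    v->data[v->size++] = value;
    return 0;
}

void vec_free(IntVec *v)
{
    assert(v != NULL);
    free(v->data);
    v->data = NULL;
    v->size = v->capacity = 0;
}
```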

Some array data structures do not reallocate storage, but do store a count of the number of elements of the array in use, called the count or size. This effectively makes the array a dynamic array with a fixed maximum size or capacity; Pascal strings are examples of this.
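The sketch below shows a fixed-capacity array with a stored count, loosely modelled on a Pascal string: a one-byte length followed by a 255-character buffer. The storage never grows. Appending only advances the count, and input that does not fit is truncated. `PString` and `pstr_append` are hypothetical names, and the truncation policy is an assumption.

```c
#include <assert.h>
#include <string.h>

typedef struct {
    unsigned char len;   /* characters in use, 0..255 */
    char chars[255];     /* fixed capacity */
} PString;

/* Append as much of s as fits; returns the number of characters copied. */
size_t pstr_append(PString *p, const char *s)
{
    assert(p != NULL && s != NULL);
    size_t room = sizeof p->chars - p->len;
    size_t n = strlen(s);
    if (n > room)
        n = room;                        /* truncate at capacity */
    memcpy(p->chars + p->len, s, n);
    p->len = (unsigned char)(p->len + n);
    return n;
}
```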

Non-linear formulas

More complicated (non-linear) formulas are occasionally used. For a compact two-dimensional triangular array, for instance, the addressing formula is a polynomial of degree 2.
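As an illustration, consider a lower-triangular n × n matrix stored row by row, keeping only the entries with j ≤ i. Row i begins after rows 0 through i − 1, which hold 1 + 2 + ... + i = i(i + 1)/2 entries. The offset of (i, j) is therefore i(i + 1)/2 + j, a degree-2 polynomial in i. The function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Offset of entry (i, j), j <= i, in a packed lower triangle. */
size_t tri_offset(size_t i, size_t j)
{
    assert(j <= i);
    return i * (i + 1) / 2 + j;
}

/* Number of stored entries for an n x n lower triangle. */
size_t tri_size(size_t n)
{
    return n * (n + 1) / 2;
}
```

A 4 × 4 triangle needs 10 slots instead of 16, and its last entry (3, 3) lands at offset 9.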

Efficiency

Both store and select take (deterministic worst-case) constant time. Arrays take linear (O(n)) space in the number of elements n that they hold.

In an array with element size k on a machine with a cache line size of B bytes, iterating through an array of n elements requires a minimum of ceiling(nk/B) cache misses, because its elements occupy contiguous memory locations. This is roughly a factor of B/k better than the number of cache misses needed to access n elements at random memory locations. As a consequence, sequential iteration over an array is noticeably faster in practice than iteration over many other data structures, a property called locality of reference. (This does not mean, however, that a perfect or trivial hash into the same local array cannot be even faster, with constant-time access.) Libraries provide low-level optimized facilities for copying ranges of memory (such as memcpy), which can move contiguous blocks of array elements significantly faster than individual element access. The speedup of such optimized routines varies by array element size, architecture, and implementation.
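The sketch below works the cache-miss bound as integer arithmetic and shows a block move in practice. `min_cache_misses` assumes the array starts on a cache-line boundary; otherwise one extra miss is possible. `insert_at` shifts the tail of an array with one `memmove` call. `memmove` rather than `memcpy` is required here because the source and destination overlap. Both function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* ceiling(n*k / B) with integers: n elements of k bytes, B-byte lines. */
size_t min_cache_misses(size_t n, size_t k, size_t B)
{
    assert(B > 0);
    return (n * k + B - 1) / B;
}

/* Insert value at position pos of an array holding n ints; the array's
   capacity must be at least n + 1. The tail moves as one block. */
void insert_at(int *a, size_t n, size_t pos, int value)
{
    assert(a != NULL && pos <= n);
    memmove(&a[pos + 1], &a[pos], (n - pos) * sizeof *a);
    a[pos] = value;
}
```

For example, scanning 1000 four-byte ints with 64-byte cache lines needs at least ceiling(4000/64) = 63 misses.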

Memory-wise, arrays are compact data structures with no per-element overhead. There may be a per-array overhead (e.g., to store index bounds), but this is language-dependent. It can also happen that elements stored in an array require less memory than the same elements stored in individual variables, because several array elements can be stored in a single word; such arrays are often called packed arrays. An extreme (but commonly used) case is the bit array, where every bit represents a single element. A single octet can thus hold up to 256 different combinations of up to 8 different conditions, in the most compact form.
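A bit array packs each boolean element into one bit. Element i is bit (i mod CHAR_BIT) of byte (i / CHAR_BIT), so on the usual 8-bit-byte platforms eight flags share one octet. The sketch below uses hypothetical names (`BITARRAY_BYTES`, `bit_set`, `bit_clear`, `bit_test`).

```c
#include <assert.h>
#include <limits.h>
#include <stddef.h>

/* Bytes needed to hold nbits flags. */
#define BITARRAY_BYTES(nbits) (((nbits) + CHAR_BIT - 1) / CHAR_BIT)

void bit_set(unsigned char *bits, size_t i)
{
    assert(bits != NULL);
    bits[i / CHAR_BIT] |= (unsigned char)(1u << (i % CHAR_BIT));
}

void bit_clear(unsigned char *bits, size_t i)
{
    assert(bits != NULL);
    bits[i / CHAR_BIT] &= (unsigned char)~(1u << (i % CHAR_BIT));
}

/* Returns 1 if element i is set, 0 otherwise. */
int bit_test(const unsigned char *bits, size_t i)
{
    assert(bits != NULL);
    return (int)((bits[i / CHAR_BIT] >> (i % CHAR_BIT)) & 1u);
}
```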

Array accesses with statically predictable access patterns are a major source of data parallelism.

Comparison with other data structures

| Data structure | Peek (index) | Insert or delete at beginning | Insert or delete at end | Insert or delete in middle | Excess space, average |
|---|---|---|---|---|---|
| Linked list | Θ(n) | Θ(1) | Θ(1), known end element; Θ(n), unknown end element | Peek time + Θ(1)[9][10] | Θ(n) |
| Array | Θ(1) | N/A | N/A | N/A | 0 |
| Dynamic array | Θ(1) | Θ(n) | Θ(1) amortized | Θ(n) | Θ(n)[11] |
| Balanced tree | Θ(log n) | Θ(log n) | Θ(log n) | Θ(log n) | Θ(n) |
| Random-access list | Θ(log n)[12] | Θ(1) | N/A[12] | N/A[12] | Θ(n) |
| Hashed array tree | Θ(1) | Θ(n) | Θ(1) amortized | Θ(n) | Θ(√n) |

Dynamic arrays, or growable arrays, are similar to arrays but add the ability to insert and delete elements; adding and deleting at the end is particularly efficient. However, they reserve linear (Θ(n)) additional storage, whereas arrays do not reserve additional storage.

Associative arrays provide a mechanism for array-like functionality without huge storage overheads when the index values are sparse. For example, an array that contains values only at indexes 1 and 2 billion may benefit from using such a structure. Specialized associative arrays with integer keys include Patricia tries, Judy arrays, and van Emde Boas trees.
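The simplest way to see the storage saving is a sorted list of (index, value) pairs searched with the standard C `bsearch`. This is a deliberately simple stand-in, not a Patricia trie, Judy array or van Emde Boas tree: lookups take O(log n), and inserts would need shifting. The names `Entry` and `sparse_get` are hypothetical, and `long` is assumed wide enough for the indexes used.

```c
#include <assert.h>
#include <stdlib.h>

/* One occupied slot of the sparse array. */
typedef struct {
    long index;
    double value;
} Entry;

static int cmp_entry(const void *a, const void *b)
{
    long x = ((const Entry *)a)->index, y = ((const Entry *)b)->index;
    return (x > y) - (x < y);
}

/* Value stored at index, or NULL if absent. entries must be sorted. */
const double *sparse_get(const Entry *entries, size_t n, long index)
{
    if (n == 0)
        return NULL;
    assert(entries != NULL);
    Entry key = { index, 0.0 };
    const Entry *e = bsearch(&key, entries, n, sizeof *entries, cmp_entry);
    return e ? &e->value : NULL;
}
```

Values at indexes 1 and 2 000 000 000 occupy two entries rather than two billion slots.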

Balanced trees require O(log n) time for indexed access, but also permit inserting or deleting elements in O(log n) time,[13] whereas growable arrays require linear (Θ(n)) time to insert or delete elements at an arbitrary position.

Linked lists allow constant-time removal and insertion in the middle but take linear time for indexed access. Their memory use is typically worse than that of arrays, but is still linear.

An Iliffe vector is an alternative to a multidimensional array structure. It uses a one-dimensional array of references to arrays of one dimension less. For two dimensions, in particular, this alternative structure would be a vector of pointers to vectors, one for each row (pointers in C or C++). Thus an element in row i and column j of an array A would be accessed by double indexing (A[i][j] in typical notation). This alternative structure allows jagged arrays, where each row may have a different size or, in general, where the valid range of each index depends on the values of all preceding indices. It also saves one multiplication (by the column address increment), replacing it with a bit shift (to index the vector of row pointers) and one extra memory access (fetching the row address), which may be worthwhile on some architectures.
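A minimal Iliffe vector in C is an array of row pointers in which each row is allocated separately. In the sketch below, row i has i + 1 elements, which makes the array jagged. Element (i, j) is filled with i * 10 + j so that the tests can check it. Accessing `t[i][j]` first loads the row pointer `t[i]` and then indexes into that row. `make_triangle` and `free_triangle` are hypothetical names.

```c
#include <assert.h>
#include <stdlib.h>

/* Build nrows rows where row i has i + 1 ints; NULL on failure. */
int **make_triangle(size_t nrows)
{
    int **rows = malloc(nrows * sizeof *rows);
    if (rows == NULL)
        return NULL;
    for (size_t i = 0; i < nrows; i++) {
        rows[i] = malloc((i + 1) * sizeof **rows);
        if (rows[i] == NULL) {           /* undo partial allocation */
            while (i > 0)
                free(rows[--i]);
            free(rows);
            return NULL;
        }
        for (size_t j = 0; j <= i; j++)
            rows[i][j] = (int)(i * 10 + j);
    }
    return rows;
}

void free_triangle(int **rows, size_t nrows)
{
    assert(rows != NULL || nrows == 0);
    for (size_t i = 0; i < nrows; i++)
        free(rows[i]);
    free(rows);
}
```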

Dimension

The dimension of an array is the number of indices needed to select an element. Thus, if the array is seen as a function on a set of possible index combinations, it is the dimension of the space of which its domain is a discrete subset. A one-dimensional array is therefore a list of data, a two-dimensional array is a rectangle of data,[14] a three-dimensional array is a block of data, and so on.

This should not be confused with the dimension of the set of all matrices with a given domain, that is, the number of elements in the array. For example, an array with 5 rows and 4 columns is two-dimensional, but such matrices form a 20-dimensional space. Similarly, a three-dimensional vector can be represented by a one-dimensional array of size 3.

See also

- Dynamic array

- Parallel array

- Variable-length array

- Bit array

- Array slicing

- Offset (computer science)

- Row- and column-major order

- Stride of an array

References

- [1] Black, Paul E. (13 November 2008). "array". Dictionary of Algorithms and Data Structures. National Institute of Standards and Technology. Retrieved 22 August 2010.

- [2] Bjoern Andres; Ullrich Koethe; Thorben Kroeger; Hamprecht (2010). "Runtime-Flexible Multi-dimensional Arrays and Views for C++98 and C++0x". arXiv:1008.2909 [cs.DS].

- [3] Garcia, Ronald; Lumsdaine, Andrew (2005). "MultiArray: a C++ library for generic programming with arrays". Software: Practice and Experience. 35 (2): 159–188. doi:10.1002/spe.630. ISSN 0038-0644. S2CID 10890293.

- [4] Richardson, David R. (2002). The Book on Data Structures. iUniverse, 112 pages. ISBN 0-595-24039-9, ISBN 978-0-595-24039-5.

- [5] Veldhuizen, Todd L. (December 1998). Arrays in Blitz++ (PDF). Computing in Object-Oriented Parallel Environments. Lecture Notes in Computer Science. Vol. 1505. Springer Berlin Heidelberg. pp. 223–230. doi:10.1007/3-540-49372-7_24. ISBN 978-3-540-65387-5. Archived from the original (PDF) on 9 November 2016.

- [6] Knuth, Donald. The Art of Computer Programming, vol. 3. Addison-Wesley.

- [7] Levy, Henry M. (1984). Capability-based Computer Systems. Digital Press. p. 22. ISBN 9780932376220.

- [8] "Array Code Examples - PHP Array Functions - PHP code". Computer Programming Web programming Tips. Archived from the original on 13 April 2011. Retrieved 8 April 2011. "In most computer languages array index (counting) starts from 0, not from 1. Index of the first element of the array is 0, index of the second element of the array is 1, and so on."

- [9] Day 1 Keynote - Bjarne Stroustrup: C++11 Style at GoingNative 2012 on channel9.msdn.com, from minute 45 or foil 44.

- [10] Number crunching: Why you should never, ever, EVER use linked-list in your code again at kjellkod.wordpress.com.

- [11] Brodnik, Andrej; Carlsson, Svante; Sedgewick, Robert; Munro, JI; Demaine, ED (1999). Resizable Arrays in Optimal Time and Space (Technical Report CS-99-09) (PDF). Department of Computer Science, University of Waterloo.

- [12] Okasaki, Chris (1995). "Purely Functional Random-Access Lists". Proceedings of the 7th International Conference on Functional Programming Languages and Computer Architecture: 86–95. doi:10.1145/224164.224187.

- [13] "Counted B-Trees".

- [14] "2-Dimensional Arrays \ Processing.org". processing.org. Retrieved 1 May 2020.

External links

- Data Structures/Arrays at Wikibooks

Source: https://en.wikipedia.org/wiki/Array_data_structure#:~:text=For%20example%3A%20int%20a%5B2,%2C%20a22%2C%20a23.
